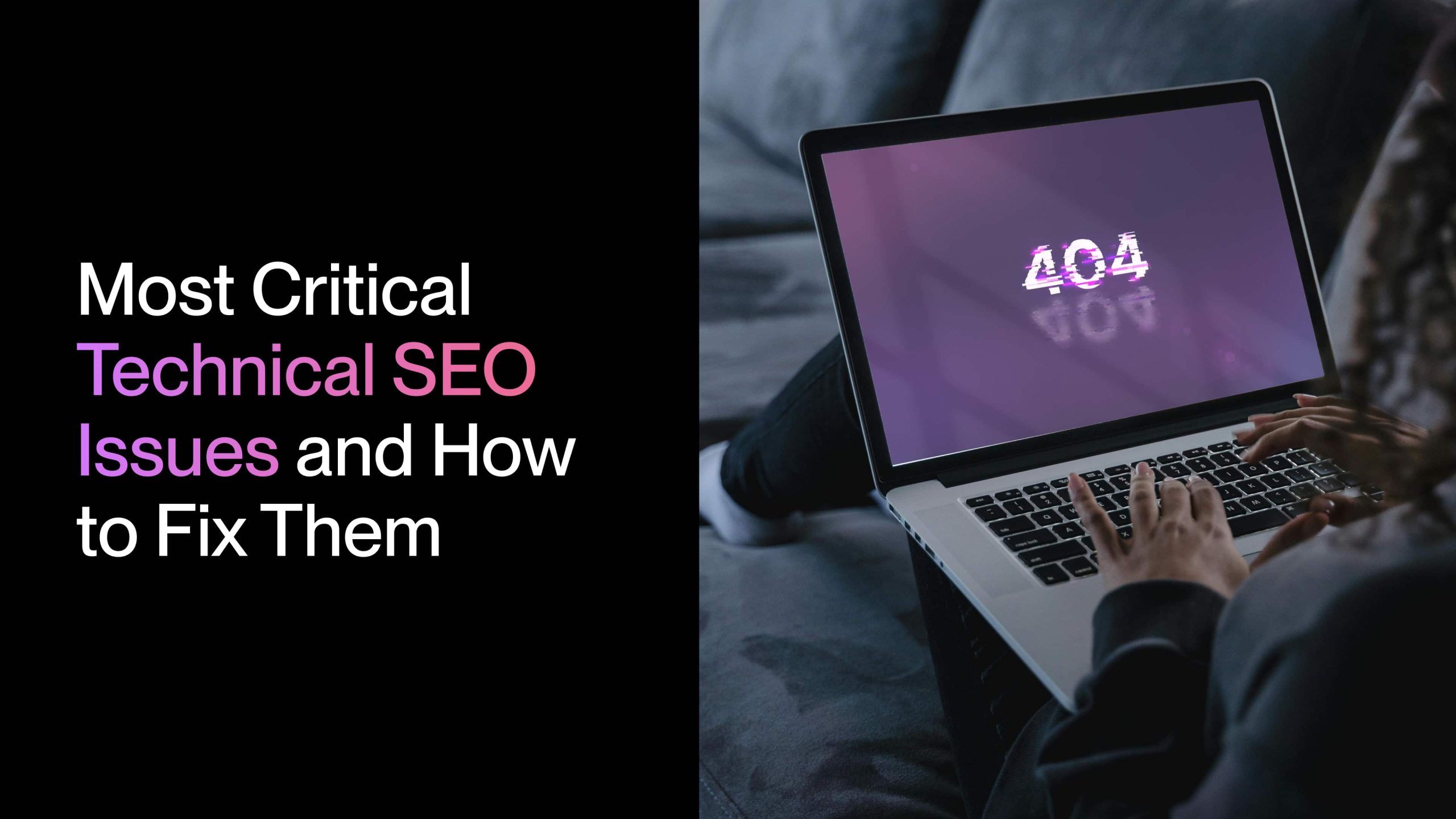

Can Google actually find and rank your pages? That depends on factors like crawlability, canonicals, and Core Web Vitals.

At Ninja Promo, we’ve improved technical SEO performance for hundreds of our clients — from enterprise ecommerce sites to hyper-growth SaaS brands.

This guide explores the 10 most critical technical SEO issues that directly affect search performance.

You’ll learn how to identify and fix large-scale, high-impact problems that deserve top priority — rather than minor fixes like missing image alt tags or isolated 404 errors.

1. Pages Not Indexed (Crawlability / Indexability Failures)

A web page that isn’t indexed can’t generate visibility or traffic — and indexation failures are one of the most critical technical SEO errors we come across.

Before Google can rank your page, Googlebot must first crawl it and then receive permission to add it to the index.

When something blocks either step, your page becomes invisible to search.

Source: Semrush

This is one of the most basic SEO principles, and failures usually trace back to one of these causes:

- Your robots.txt file might contain rules that block Googlebot from accessing important pages.

- Meta robots tags with a noindex directive tell Google to crawl but not index the page, which developers sometimes add during staging and forget to remove.

- A missing or outdated XML sitemap makes it harder for Google to discover your pages, especially on large sites with internal linking gaps.

- Crawlability problems like server timeouts, redirect errors, or login walls also stop bots from reaching your site’s content.

“The most common reasons pages fail to get indexed include noindex tags or directives, accidental blocking in the robots.txt file, and a lack of internal links when pages are created in isolation and not integrated into the site structure.”

Vadzim Z, Head of SEO at Ninja Promo

✅Pro tip: Did you know that many technical SEO best practices also apply to LLM SEO?

How to Fix It

Start by checking which pages are not indexed using Google Search Console’s Indexing report, then work through each error type.

Open Google Search Console, go to “Indexing” then “Pages,” and filter by “Not indexed” to see which URLs are affected and why.

For pages blocked by robots.txt, edit the file to remove or adjust the disallow rules, then request indexing in Google Search Console.

For pages with noindex tags, check the HTML head section and remove the meta robots noindex directive, or ask your developer to remove it from the CMS template.

Submit an updated XML sitemap through Google Search Console under “Indexing,” then “Sitemaps,” to help Google discover your important pages.

Use the URL Inspection tool in Google Search Console to test individual pages and confirm they show “URL is available to Google” after your fixes.
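If you want to pre-check a batch of URLs before opening Search Console, the two most common blockers (robots.txt rules and noindex tags) can be tested offline. The sketch below is a minimal illustration using only Python’s standard library. The function names are ours, not part of any Google tool, and Python’s `urllib.robotparser` doesn’t replicate Googlebot’s rule matching exactly (wildcard rules, for example), so treat the result as a first pass.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_blocked_by_robots(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text disallows `url` for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


class _RobotsMetaFinder(HTMLParser):
    """Collects the content of every <meta name="robots"> or "googlebot" tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", "").lower())


def has_noindex(html: str) -> bool:
    """Return True if any robots meta tag in the HTML carries noindex."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    return any("noindex" in directive for directive in finder.directives)
```

Feed it the robots.txt text and page HTML you already have from a crawl export. Any URL flagged here is worth confirming with the URL Inspection tool.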

2. Slow Core Web Vitals (LCP, CLS, INP)

Core Web Vitals measure how fast your pages load, how stable they feel, and how quickly they respond to user clicks.

Google uses these user experience signals as ranking factors — and poor scores can hurt your search visibility.

Here’s what each Core Web Vitals metric tracks:

- Largest Contentful Paint (LCP) measures how long it takes for the biggest visible element to load (usually your hero image or main headline).

- Cumulative Layout Shift (CLS) measures how much elements shift around while the page loads, which can frustrate users trying to click or scroll.

- Interaction to Next Paint (INP) measures how fast the page responds when someone taps a button or types in a field.

Source: SEOmator

Several common technical SEO issues cause poor Core Web Vitals scores, including:

- Large images that haven’t been compressed take longer to download, which slows down your LCP.

- Images loading all at once forces the browser to handle everything simultaneously, even content that visitors haven’t scrolled to yet.

- Bloated CSS and JavaScript files contain unnecessary code that makes the browser process too many elements before anything displays on screen.

- Elements like ads, videos, or images that load without reserved space cause the page to shift around, making users accidentally click the wrong element.

- Slow content delivery from your server makes pages feel sluggish when the server takes too long to respond.

“In our observations, LCP has the greatest impact on rankings, since slow loading of the main content strongly affects user behavior. Users are unlikely to wait more than a few seconds for a page to load, and returning to search results is a strong negative signal that the page doesn’t satisfy the query, which, at scale, can hurt rankings.”

Vadzim Z, Head of SEO at Ninja Promo

How to Fix It

Start by running a Core Web Vitals test using Google PageSpeed Insights or the Google Search Console Core Web Vitals report.

Then, address each issue based on what’s dragging your scores down. For example, you can:

- Compress your images using tools like ShortPixel, and add responsive images that adjust size based on the visitor’s screen.

- Implement lazy loading so images only load when users scroll to them.

- Use JavaScript minification (e.g., via Terser) and CSS optimization tools to remove unnecessary code, extra spaces, and comments from your files.

- Add width and height attributes to all images, ads, and video embeds so the browser reserves space for them before they load.

- Improve content delivery by using a CDN (content delivery network) that serves your files from servers closer to your visitors. If server response times are still slow, you might want to upgrade your hosting plan or switch to a faster provider.
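To decide which of these fixes to tackle first, it helps to bucket each metric the way Google’s reports do. The sketch below uses Google’s published thresholds (LCP in seconds, CLS unitless, INP in milliseconds). The function names and the sample values are our own illustration.

```python
# Google's published Core Web Vitals thresholds:
# (upper bound for "good", upper bound for "needs improvement")
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
    "INP": (200, 500),   # milliseconds
}


def rate_metric(name: str, value: float) -> str:
    """Classify one metric value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[name]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def rate_page(metrics: dict[str, float]) -> dict[str, str]:
    """Classify every metric reported for a page."""
    return {name: rate_metric(name, value) for name, value in metrics.items()}


# Hypothetical field data for one page
print(rate_page({"LCP": 3.2, "CLS": 0.05, "INP": 650}))
```

In this made-up example, INP is the metric in the “poor” bucket, so trimming JavaScript would come before image work.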

3. Duplicate Content Without Proper Canonicalization

When the same content lives on multiple URLs, your pages end up competing against each other — backlinks and ranking signals get split instead of flowing to one strong page.

Canonical tags fix this by telling Google which version to index.

Source: Semrush

When they’re missing, Google has to guess which page to rank, and your authority gets diluted across duplicates.

Here’s how duplicate content typically gets created:

| Cause | Example | What happens |
| --- | --- | --- |
| WWW vs non-WWW | example.com and www.example.com | Same page accessible at two different addresses. |
| HTTP vs HTTPS | http://site.com and https://site.com | Both versions load instead of redirecting to one. |
| Trailing slashes | /page and /page/ | Two URLs serve identical content. |
| URL parameters | /shoes and /shoes?color=red | Filters or tracking codes create extra indexable URLs. |
| Pagination | /blog/page/1, /blog/page/2 | Pages may share content or confuse Google without proper setup. |
| CMS behavior | /product and /category/product | The platform creates multiple paths to the same page. |

“Typically, the greatest damage comes from CMS-generated duplicates, especially on large ecommerce sites, because they scale quickly and create large volumes of low-quality duplicate content.”

Vadzim Z, Head of SEO at Ninja Promo

How to Fix It

To fix technical SEO issues with canonicals and duplicate content, audit your site for duplicate URLs using a crawling tool like Screaming Frog and implement necessary changes.

Here’s how to fix each issue we mentioned above:

- WWW vs non-WWW: Pick one version and 301 redirect the other. You can do this in your server settings or .htaccess file.

- HTTP vs HTTPS: Set up a sitewide 301 redirect from HTTP to HTTPS. Make sure your SSL certificate is installed and working.

- Trailing slashes: Decide if you want to use “/page” or “/page/” and stick with it. Redirect the format you don’t use.

- URL parameters: Point canonical tags to the clean URL without the parameters. Google retired the Search Console “URL Parameters” tool in 2022, so canonicals and consistent internal links now have to do this job.

- Pagination: Add self-referencing canonicals to each page in the series. If pages have mostly the same content, add canonicals to connect them all to page one.

- CMS behavior: Run a crawl to find duplicate paths, then either add canonicals to your preferred URL or turn off alternate URL structures in your CMS.
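Most of the fixes above come down to one rule: every variant of a URL should resolve to a single preferred form. Here is a minimal sketch of that rule, assuming a hypothetical site policy of HTTPS, the www host, no trailing slash, and no query string. Swap in whichever conventions you picked.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical policy for this example; choose your own and keep it consistent.
PREFERRED_HOST = "www.example.com"


def preferred_url(url: str) -> str:
    """Return the single URL that duplicates of `url` should point to."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    # Treat the bare domain and the www host as the same site.
    if host == PREFERRED_HOST.removeprefix("www."):
        host = PREFERRED_HOST
    # Drop the trailing slash, but keep "/" for the homepage.
    path = parts.path.rstrip("/") or "/"
    # Force HTTPS and discard query string and fragment.
    return urlunsplit(("https", host, path, "", ""))


for variant in (
    "http://example.com/page/",
    "https://www.example.com/page",
    "https://example.com/page?utm_source=facebook",
):
    print(variant, "->", preferred_url(variant))
```

All three variants map to the same address, which is the target your 301 redirects and canonical tags should agree on. Dropping every parameter is a simplification: if some parameters change the content in ways you want indexed, keep those.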

4. Misconfigured Canonicals (Self-referencing Errors, Wrong Targets)

That said, having a canonical tag isn’t enough; it also needs to point to the correct location.

If your tag sends Google to a broken page, a redirect, or something completely unrelated, Google will either follow that wrong signal or ignore it altogether.

Here are the most common issues you might encounter:

| Misconfiguration | Example | What goes wrong |
| --- | --- | --- |
| Canonical to a 404 page | Page points to /old-url that no longer exists | Google ignores the canonical and picks a version itself. |
| Canonical to a redirected URL | Your page’s canonical points to /old-url, but /old-url redirects to /new-url | Creates confusion and wasted crawl budget as Google follows the chain. |
| Canonical chains | Page A → Page B → Page C | Google may stop following after one hop and index the wrong version. |
| HTTP canonical on HTTPS page | HTTPS page canonicals to http://example.com/page | Sends mixed signals about which protocol is preferred. |
| Relative instead of absolute URL | canonical="/page" instead of "https://example.com/page" | Can break on subdomains or be misinterpreted by crawlers. |
| Multiple canonical tags | Two different canonical tags in the HTML head | Google doesn’t know which to trust and may ignore both. |
| Canonical to unrelated page | /blog-post canonicals to /homepage | All ranking signals go to the wrong page. |

“Canonical tags only pose a significant risk when they’re implemented incorrectly. Ranking issues typically arise when canonicals point to irrelevant, non-indexable, or conflicting URLs, causing ranking signals to be misattributed and the wrong pages to be indexed.

The fastest way to detect ignored canonicals is through a site crawl (using Screaming Frog or similar tools), which reveals inconsistencies between canonical directives, page status codes, and the URLs Google actually indexes.”

Olivia G, SEO Specialist at Ninja Promo

How to Fix It

Audit your canonical tags using Screaming Frog or Semrush Site Audit to find misconfigurations, then update each tag to point to the correct, live URL.

Here’s how:

- Check that every canonical points to a real page that returns a 200 status code, not a 404 or redirect.

- Update canonicals to use absolute URLs (https://example.com/page) instead of relative paths (/page).

- Remove duplicate canonical tags so each page only has one in the HTML head.

- If your site uses HTTPS, make sure canonical tags also use HTTPS.

- Fix canonical chains by pointing directly to the final destination page.

- Verify canonicals point to relevant pages (e.g., a blog post’s canonical should point to that post, not to your homepage).
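The checks in this list are mechanical enough to script against a crawl export. The sketch below is one possible shape for that, not how Screaming Frog or Semrush work internally. It assumes you already have, for each page, the canonical hrefs found in its head and a lookup of HTTP status codes per URL. The function name and issue labels are ours.

```python
from urllib.parse import urlsplit


def audit_canonical(page_url: str, canonicals: list[str], status_of: dict[str, int]) -> list[str]:
    """Return the canonical problems found on one page.

    canonicals: every canonical href found in the page's <head>.
    status_of:  HTTP status per URL, e.g. taken from a crawl export.
    """
    if not canonicals:
        return ["missing canonical tag"]

    issues = []
    if len(set(canonicals)) > 1:
        issues.append("multiple conflicting canonical tags")

    target = canonicals[0]
    parts = urlsplit(target)
    if not parts.scheme or not parts.netloc:
        # Without an absolute URL the remaining checks are not meaningful.
        issues.append("relative canonical URL")
        return issues

    if urlsplit(page_url).scheme == "https" and parts.scheme == "http":
        issues.append("HTTP canonical on HTTPS page")

    status = status_of.get(target)
    if status is None:
        issues.append("canonical target not crawled")
    elif 300 <= status < 400:
        issues.append("canonical points to a redirect")
    elif status != 200:
        issues.append(f"canonical target returns {status}")
    return issues
```

An empty list means the tag passed these checks. Whether the target is actually relevant to the page still needs a human look.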

5. Server Errors (5xx, Intermittent Downtime)

Server errors make your pages unavailable for crawling, and repeated failures can cause Google to visit your site less frequently over time.

Such technical SEO errors can tank your rankings in the long run and make your site less trustworthy.

How do you know if this is happening?

Check your server logs or SEO crawl reports for status codes in the 5xx range (for example, 500 or 503).

These codes mean your server had a problem and couldn’t load a page that should normally be available:

| Error | What it means |
| --- | --- |
| 500 Internal Server Error | Something broke on your server, which is often caused by faulty plugins, bad code, or misconfigured files. |
| 502 Bad Gateway | Your server got an invalid response from another server it depends on, which is common with CDNs. |
| 503 Service Unavailable | Your server is overloaded or down for maintenance. |
| 504 Gateway Timeout | Your server took too long to respond, which might happen due to slow databases or poor hosting. |
| Intermittent downtime | Your site works when you check it, but fails when Google crawls it during off-hours. |

“Based on our observations, the impact depends on the scale of the errors. Persistent site-wide 5xx issues can cause an entire site to drop out of Google’s index, while isolated errors usually have a more limited effect. Even then, affected pages should be fixed quickly and reindexed to avoid lasting crawl or ranking issues.”

Olivia G, SEO Specialist at Ninja Promo

How to Fix It

To fix server errors, set up server monitoring and work through these fixes:

- Use a tool like UptimeRobot to alert you when your site goes down.

- Open “Indexing” > “Pages” in Google Search Console to spot crawl errors Google has already found.

- Look at your server logs using a tool like the Log File Analyzer — errors might spike at certain times or when traffic increases.

- Reach out to your hosting provider if 503 or 504 errors keep happening — you might need more server resources or better caching.

- Disable your plugins one by one to see if one of them causes the 500 errors.

- Set up a CDN (content delivery network) like Cloudflare to reduce the load on your main server and add an extra layer of protection.
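If you don’t have a log analysis tool handy, a short script can show when 5xx errors cluster. The sketch below assumes your access log uses the common or combined log format, with the timestamp in square brackets and the status code right after the quoted request. The sample lines are invented, so adjust the pattern if your server logs differently.

```python
import re
from collections import Counter

# Matches e.g.: [10/Oct/2024:03:12:01 +0000] "GET /shoes HTTP/1.1" 503
LOG_LINE = re.compile(
    r'\[(?P<day>[^:]+):(?P<hour>\d{2}):\d{2}:\d{2} [^\]]+\] "[^"]*" (?P<status>\d{3})'
)


def count_5xx_by_hour(lines):
    """Count server errors per day and hour so spikes stand out."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and match["status"].startswith("5"):
            counts[f'{match["day"]} {match["hour"]}:00'] += 1
    return counts


sample = [
    '66.249.66.1 - - [10/Oct/2024:03:12:01 +0000] "GET /shoes HTTP/1.1" 503 0',
    '66.249.66.1 - - [10/Oct/2024:03:40:19 +0000] "GET /cart HTTP/1.1" 500 0',
    '203.0.113.7 - - [10/Oct/2024:09:05:44 +0000] "GET / HTTP/1.1" 200 5120',
]
for hour, errors in count_5xx_by_hour(sample).most_common():
    print(hour, errors)
```

A cluster of errors in one off-peak hour, as in this invented sample, would point toward something scheduled (backups, cron jobs) rather than traffic load.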

6. Redirect Chains & Loops

Long redirect chains slow down your pages and waste the crawl budget Google gives your site.

A redirect tells browsers and search engines: “This page moved, go here instead.” A chain forms when that destination also redirects to another URL — so visitors bounce through multiple stops before reaching the actual page.

Source: Conductor

For example, someone clicks a link to /old-page. Your server sends them to /new-page. But /new-page also redirects to /final-page.

That’s a chain with two hops.

Googlebot follows along, but each extra hop adds delay and may weaken the ranking signals that reach the final page.

Here’s what typically causes these problems:

- Old migrations that were never cleaned up. You moved URLs once, then moved them again later — but the original redirect still points to the middle destination instead of the final one.

- HTTPS and www changes layered together. For example, the HTTP version redirects to HTTPS, which redirects to www, which then redirects again to add a trailing slash.

- CMS platforms auto-creating 301 redirects every time you change a URL slug. In this case, you might accidentally build a chain without realizing it.

“Redirect chains usually accumulate gradually. They most often result from URL changes, site migrations, or content consolidation — especially on ecommerce sites where redirects are frequently created for discontinued or missing products. The impact is greatest on large ecommerce sites with thousands of URLs.”

Vadzim Z, Head of SEO at Ninja Promo

How to Fix It

Find your redirect chains, then update each redirect to skip the middle steps and point directly to the final URL.

For example, you can:

- Run your site through Semrush Site Audit or Screaming Frog. Both tools show you a list of redirect chains and how many hops each one has.

- For each chain, update the first redirect to point to the final destination. Skip everything in between.

- If you’re using WordPress, use a plugin like Redirection to make these changes. You can also hire a developer to update the server settings or .htaccess file.

- Test by clicking your old URLs. If you land on the right page without the address bar jumping around, you’re good to go.
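The “skip the middle steps” rule can be sketched in a few lines if you export your redirects as a source-to-target mapping. This is an illustration with a hypothetical function name, not a drop-in replacement for your redirect plugin. It also raises an error when it finds a loop, since a loop has no final destination to point to.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every redirect source straight to its final destination.

    Raises ValueError if following a redirect ever revisits a URL (a loop).
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Keep following while the target is itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


chain = {"/old-page": "/new-page", "/new-page": "/final-page"}
print(flatten_redirects(chain))
```

For the two-hop example above, both /old-page and /new-page end up pointing directly at /final-page.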

7. Incorrect Mobile Rendering / Mobile-First Issues

Google prioritizes the mobile version of your site when deciding rankings — so if content is broken or missing on mobile, your visibility might be affected.

This is called mobile-first indexing.

By default, Google looks at your mobile pages first and treats that version as the primary one.

If content is visible on desktop but hidden or broken on mobile, Google may ignore it when ranking your pages.

Source: Sitechecker

“Even if everything looks fine on the desktop, the mobile version must be tested carefully. With mobile-first indexing, Google only indexes what it can render on mobile. Common issues include blocked JavaScript or CSS, content hidden behind interactions, broken lazy loading of key elements, JavaScript rendering errors, reduced mobile content compared to desktop, viewport-based layouts that push content out of view, and accidental noindex tags on mobile pages.”

Olivia G, SEO Specialist at Ninja Promo

We typically encounter these common issues:

- Content you can see on desktop but not on mobile. For example, some sites hide text behind “click to expand” sections on mobile to save space. If those sections don’t work properly — or if Google can’t click them — that content disappears from indexing.

- JavaScript rendering not working properly. Your page relies on scripts to load the main content, but something breaks on mobile, and the content never shows up.

- Content overflowing off the screen. Text or images are too wide, so users have to scroll sideways. Google tracks this as a bad user experience signal.

- Buttons jammed together. When links and tap targets are too close, people hit the wrong element, further breaking the mobile experience.

How to Fix It

Check for mobile experience issues using Google Search Console’s Core Web Vitals report, then work through these fixes:

- If content is hidden on mobile, remove the accordion or other elements hiding it.

- For text or images overflowing the screen, check your CSS. Add max-width: 100% to images and make sure containers don’t have fixed pixel widths.

- Make buttons and links at least 48 × 48 pixels, and add padding or margins so there’s roughly 8 pixels of space between tap targets.

- If JavaScript rendering is the problem, ask your developer about switching to server-side rendering. This delivers the full HTML to Googlebot instead of relying on scripts to build the page.

After making changes, test how your page loads on an actual phone and note the improvements.

8. Massive Parameter URL Duplication

URL parameters can create duplicate content pages that affect website performance and make it harder for Google to find your key pages.

Parameters are the bits that come after the question mark in a URL. They’re used for tracking, filtering, sorting, and session IDs:

Source: Semrush

The problem is that each parameter combination creates a “new” URL — even though the page content is basically the same:

| Parameter type | Example | What happens |
| --- | --- | --- |
| Filters | /shoes?color=red&size=10 | Every filter combo becomes a separate URL. Ten colors times eight sizes leads to 80 URLs for one product category. |
| Sorting | /products?sort=price-low | Same products, different order. Google sees it as a new page. |
| Tracking codes | /page?utm_source=facebook | Marketing tags create duplicates of every page you promote. |
| Session IDs | /page?sessionid=abc123 | Each visitor gets a unique URL, which leads to thousands of duplicates pointing to identical content. |
| Pagination mixed with filters | /shoes?color=red&page=2 | Filters plus page numbers multiply the problem fast. |

On a big ecommerce site, for example, this can create hundreds of thousands of low-value URLs competing with the pages you actually need to rank.

How to Fix It

To avoid parameter duplication, tell Google which parameter URLs matter and stop it from crawling the ones that don’t:

- Add canonical tags to parameter URLs pointing to the clean version — e.g., so /shoes?color=red canonicals to /shoes.

- Block unnecessary parameters in your robots.txt file, especially session IDs and tracking codes.

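- For parameters you never want indexed, add a noindex directive using meta robots tags. Keep those URLs crawlable, though: Google has to fetch a page to see its noindex tag, so a robots.txt block on the same URL hides the directive.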

- Add a nofollow attribute to internal links that generate parameter URLs, so you’re not passing anchor text value to duplicate pages.
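Before choosing between these options, it helps to measure how bad the duplication is. The sketch below groups a list of crawled URLs by their parameter-free version and reproduces the filter arithmetic from the table above. The function names and sample URLs are ours.

```python
import math
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def group_by_clean_url(urls):
    """Group parameter URLs under the clean URL they duplicate."""
    groups = defaultdict(list)
    for url in urls:
        parts = urlsplit(url)
        if parts.query:
            clean = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
            groups[clean].append(url)
    return dict(groups)


def filter_url_count(options_per_filter):
    """Number of URLs created when every filter combination is crawlable."""
    return math.prod(options_per_filter)


crawled = [
    "https://example.com/shoes",
    "https://example.com/shoes?color=red",
    "https://example.com/shoes?color=red&size=10",
    "https://example.com/page?utm_source=facebook",
]
for clean, variants in group_by_clean_url(crawled).items():
    print(clean, len(variants))
print(filter_url_count([10, 8]))  # ten colors times eight sizes
```

Clean URLs with the largest variant counts are the ones where a canonical or crawl rule will save the most crawl budget.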

9. Incorrect or Conflicting Hreflang Implementation (For Multilingual/Multiregional Sites)

Incorrect or inconsistent hreflang tags can cause Google to show the wrong language version of your page or ignore your international targeting entirely, either of which undermines your multilingual SEO.

Hreflang tags tell Google which language and region each page is meant for:

- When they’re set up correctly, a Spanish user in Spain sees your Spanish page, while an English user in the UK sees your English version.

- When they’re broken, Google has to guess — and it often makes mistakes.

Source: Seobility

How to Fix It

Audit your hreflang tags to find missing return links and conflicts with canonical tags, then fix each error.

Here’s how:

- Use Semrush Site Audit to scan for hreflang errors across your site.

- Make sure every hreflang tag has a matching return tag on the target page. If page A references page B, page B must reference page A.

- Check that your canonical tags point to the same page the hreflang is on — not a different language version.

- Use valid ISO language and region codes. For example, UK English must be written as en-GB, because UK isn’t a recognized region code.

- Include an x-default tag to tell Google which page to show when no hreflang matches the user’s location.
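Missing return tags are easy to overlook by eye but simple to detect programmatically. The sketch below assumes you’ve extracted each page’s hreflang annotations into a dictionary (page URL to language code to target URL). The structure and function name are hypothetical, and the check covers only return links and x-default, not code validity.

```python
def hreflang_issues(annotations: dict[str, dict[str, str]]) -> list[str]:
    """Find missing x-default entries and missing return tags.

    annotations maps page URL -> {hreflang code: target URL} as found on that page.
    """
    issues = []
    for page, alternates in annotations.items():
        if "x-default" not in alternates:
            issues.append(f"{page}: no x-default")
        for code, target in alternates.items():
            if target == page:
                continue  # self-reference, nothing to reciprocate
            # The target page must list this page among its own alternates.
            if page not in annotations.get(target, {}).values():
                issues.append(f"{page}: {target} ({code}) has no return tag")
    return issues


pages = {
    "https://example.com/en/": {
        "en-GB": "https://example.com/en/",
        "es-ES": "https://example.com/es/",
        "x-default": "https://example.com/en/",
    },
    # The Spanish page forgets to reference the English one.
    "https://example.com/es/": {"es-ES": "https://example.com/es/"},
}
for issue in hreflang_issues(pages):
    print(issue)
```

In this made-up example the Spanish page is flagged twice: it has no x-default, and it doesn’t link back to the English page that references it.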

✅Pro tip: Learn how to build a powerful SEO localization strategy.

10. Poor JavaScript SEO Implementation

If your site relies on JavaScript to load content, Google might not see that content at all — leaving gaps in indexing.

Here’s the thing: Googlebot can render JavaScript, but it doesn’t always work the way you’d expect.

The crawler has limited resources and a queue of pages to process.

If your JavaScript takes too long to execute, or if something breaks during rendering, Google indexes the page without the content that was supposed to load.

Source: Semrush

There are a few issues that might cause these indexing gaps, such as:

- Client-side rendering loads an empty HTML shell first, then JavaScript fills in the content afterward. Google has to wait for that second step. Sometimes it doesn’t wait long enough, or the scripts fail.

- Content that only appears after user interaction. If text or links only show up when someone clicks a button or scrolls, Googlebot never triggers that action. It only sees what loads automatically.

- Third-party scripts blocking the main content. Analytics, chat widgets, and ad scripts can slow down or break the JavaScript that loads your page content.

- Delayed loading for “above the fold” content. Some sites lazy-load everything, including the main content at the top of the page. Google might crawl before that content appears.

- Heavy JavaScript files that time out. If your scripts are too large or your server is slow, Google may give up before rendering finishes.

How to Fix It

Check if Google can actually see your JavaScript content, then switch to a rendering method that doesn’t rely on the crawler executing scripts:

- Use Google Search Console’s URL Inspection tool and click “Test Live URL” then “View Tested Page” to see what Googlebot renders. Compare it to what you see in a browser.

- If critical content is missing, consider server-side rendering, which delivers fully-built HTML to Google instead of requiring JavaScript to run first.

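- If server-side rendering isn’t feasible yet, consider dynamic rendering, where you serve pre-rendered HTML to bots while regular users still get the JavaScript version. Google describes this as a workaround rather than a long-term solution.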

- Move important content out of interactive elements. If users need to click something to see it, Google probably can’t see it either.

- Audit your third-party scripts and remove anything that’s not essential for the initial page load.
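A quick way to spot client-side rendering gaps at scale is to check whether your key content exists in the raw HTML, before any JavaScript runs. The sketch below is a rough proxy rather than a substitute for the URL Inspection tool: it extracts visible text from an HTML string (ignoring script and style blocks) and reports which phrases are missing. The function name and sample markup are ours.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping anything inside <script> or <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_raw_html(raw_html: str, key_phrases: list[str]) -> list[str]:
    """Return the phrases that do not appear in the un-rendered HTML text."""
    extractor = _TextExtractor()
    extractor.feed(raw_html)
    text = " ".join(" ".join(extractor.chunks).split()).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in text]


client_rendered = (
    '<html><body><div id="root"></div>'
    '<script>render("Free shipping on all orders")</script></body></html>'
)
print(missing_from_raw_html(client_rendered, ["Free shipping"]))
```

Any phrase this reports depends on JavaScript to appear, which makes that page a good candidate for server-side rendering.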

✅Pro tip: Explore the key SEO statistics to prep your strategy.